Understanding APPL Functions

APPL functions are the fundamental building blocks of APPL, marked by the @ppl decorator. As seen in the QA examples, each APPL function is a self-contained module encapsulating LM prompts and Python workflows to realize the functionality.

Difference to Python functions

APPL functions extend Python functions and are designed to seamlessly blend LM prompts with Python code. You can use Python syntax and libraries in APPL functions as you would in normal Python functions. Beyond normal Python functions, an APPL function essentially adds to a Python function a Prompt Context that is specially tailored for LM interactions. New features of APPL functions include:

- Prompt Capturing: You can easily define prompts with expression statements within APPL functions.

- Prompt Retrieval: Prompts are automatically retrieved when making LM calls. You may also retrieve prompts in the context with predefined functions.

- Context Passing: The prompt context can be passed to other APPL functions with configurable options.

Prompt Context

Each APPL function has a prompt context, which is an object that stores the prompts and other information. The context is automatically managed by the APPL framework, and you don't need to worry about it in most cases.

Prompt Capturing

As you have seen in the QA examples, you can define prompts with expression statements within APPL functions, including string literals (e.g., "Hello"), formatted strings (e.g., f"My name is {name}"), or more complex expressions. For types that subclass Sequence, such as list and tuple, the elements are recursively captured as prompts one by one. You may also define custom types and the ways to convert them to prompts by subclassing Promptable and implementing the __prompt__ method. See the Appendix for more details.
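The capturing rules above can be pictured with a small pure-Python model. This is only a sketch under stated assumptions: the capture helper and the Greeting class are hypothetical and are not APPL's implementation. Only the __prompt__ method name comes from the text above, and the real Promptable base class is deliberately not used so that the snippet runs without APPL installed.

```python
from collections.abc import Sequence


class Greeting:
    """Hypothetical custom type that knows how to render itself as a prompt."""

    def __init__(self, name: str):
        self.name = name

    def __prompt__(self) -> str:
        return f"Hello, {self.name}!"


def capture(value, prompts: list) -> None:
    """Toy stand-in for prompt capturing: appends rendered prompts to a list."""
    if value is None:
        return  # nothing to capture
    if isinstance(value, str):
        prompts.append(value)  # str is also a Sequence, so check it first
    elif hasattr(value, "__prompt__"):
        capture(value.__prompt__(), prompts)
    elif isinstance(value, Sequence):
        for item in value:  # elements are captured one by one, recursively
            capture(item, prompts)
    # Assumption: this toy model simply ignores every other type.


prompts = []
capture("Hello", prompts)
capture(["first", ("second", Greeting("APPL"))], prompts)
print(prompts)
```

The nested list and tuple are flattened in order, and the custom object contributes whatever its __prompt__ method returns.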

How about docstrings in APPL functions?

A docstring is a special expression statement in Python. There are two cases for docstrings in APPL functions:

- If the docstring is triple-quoted, it will NOT be captured as a prompt by default. To also include the docstring as part of the prompt (as a system message or user message), you may set docstring_as to "system" or "user" in the @ppl decorator.

- Otherwise, it will be captured as a prompt. But if the content is not meant to be the docstring of the function, it is recommended to use an f-string instead.
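The f-string recommendation rests on a plain Python rule that can be checked without APPL: a string literal that is the first statement of a function becomes its docstring regardless of quoting style, whereas an f-string never does. The function names below are illustrative only.

```python
def triple_quoted():
    """I am a docstring."""


def single_quoted():
    "I am also a docstring, even without triple quotes."


def f_string(name="APPL"):
    f"Hello {name}, I am an ordinary expression statement."


print(triple_quoted.__doc__)
print(single_quoted.__doc__)
print(f_string.__doc__)  # None: f-strings are never treated as docstrings
```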

How about multiline strings in APPL functions?

The multiline strings will be cleaned using inspect.cleandoc before being captured as prompts.

It is recommended that you follow the indentation of the function.
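To see what this cleaning does, the standard-library function can be called directly, outside any APPL function. The build_prompt helper and its text are made up for illustration. The common leading indentation and the surrounding blank lines are removed, while relative indentation inside the string is kept.

```python
import inspect


def build_prompt() -> str:
    # The string is indented to match the function body, as recommended above.
    text = """
        You are a helpful assistant.
          - Keep answers short.
        Answer in English.
    """
    return inspect.cleandoc(text)


print(build_prompt())
# You are a helpful assistant.
#   - Keep answers short.
# Answer in English.
```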

Add image prompt

It's easy to add image prompts in APPL functions by using the Image class. The following example demonstrates how to add this example image as part of the prompt in an APPL function.

```python
from os import PathLike
from pathlib import Path

import PIL.Image
from appl import Image, gen, ppl


@ppl
def query_text(image_url: str):
    "Look, it's a commercial logo."
    Image(image_url)
    "What's the text on the image? "
    "Your output should be in the format: The text on the image is: ..."
    return gen("gpt4o-mini", stop="\n")


IMAGE_URL = "https://maproom.wpenginepowered.com/wp-content/uploads/OpenAI_Logo.svg_-500x123.png"

# print(query_text(IMAGE_URL))
# The text on the image is: OpenAI


@ppl
def query_image(image_file: PathLike):
    "Which python package is this?"
    Image.from_file(image_file)
    # PIL.Image.open(image_file)  # alternative, APPL recognizes an ImageFile
    return gen("gpt-4o-mini", stop="\n")


image_file = Path(__file__).parent / "pillow-logo-dark-text.webp"
print(query_image(image_file))
# Pillow
```

Explicitly capture prompts

If you want to capture prompts explicitly, simply use the grow function to append the content to the prompt.

```python
from appl import gen, grow, ppl


@ppl
def count(word: str, char: str):
    grow(f"How many {char}s are there in {word}?")
    return gen()
```

In other words, there is an implicit grow function wrapped around the expression statements in APPL functions.

Pay attention to return values of function calls

Function calls are also expression statements, which means their return values (when not None) may be captured as prompts based on their type. To avoid capturing the return value, you may write the call as an assignment statement, for example when calling the pop function: _ = {"example": "Hello World"}.pop("example").
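Python's own ast module shows why the assignment form helps: a bare call parses as an expression statement (ast.Expr), the kind of statement described above as being captured, while the assignment form parses as ast.Assign. The snippet only inspects syntax and makes no claim about how APPL compiles functions internally.

```python
import ast

source = '''
{"example": "Hello World"}.pop("example")
_ = {"example": "Hello World"}.pop("example")
'''

# Parse both statements and report the node type of each one.
statements = ast.parse(source).body
kinds = [type(stmt).__name__ for stmt in statements]
print(kinds)  # ['Expr', 'Assign']
```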

Prompt Retrieval

Similar to the local and global variables in Python (retrieved with locals() and globals() functions, respectively), you can retrieve the prompts captured in the current function (with records()) or the full conversation in the context (with convo()). This example demonstrates how to retrieve the prompts captured in the current function and the full conversation in the context.

When making LM calls using gen(), the full conversation is automatically retrieved from the context as the prompt. Therefore, instead of passing the prompt explicitly, the position of the gen() function within the APPL function determines the prompt used for the generation.

Context Passing

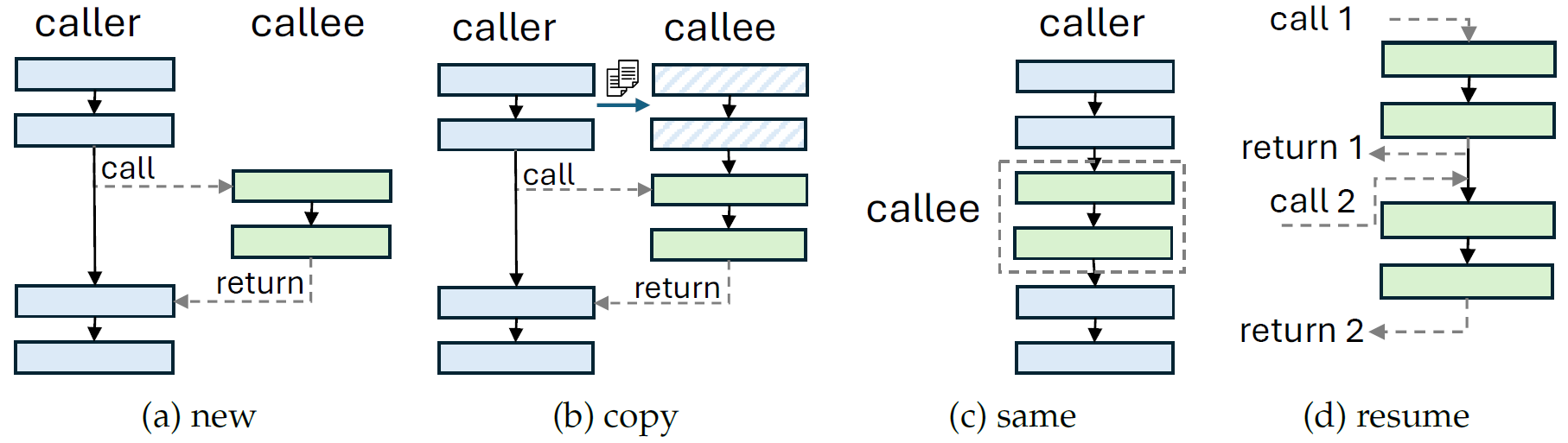

There are four different ways to pass the context when calling another APPL function (the callee) in an APPL function (the caller): new, copy, same, and resume.

- new: The default behavior, which creates a new empty context.

- copy: This is similar to call by value in programming languages. The callee's context is a copy of the caller's context, therefore the changes in the callee's context won't affect the caller's context.

- same: This is similar to call by reference in programming languages. The callee's context is the same as the caller's context, therefore the changes in the callee's context will affect the caller's context.

- resume: This resumes the context of the function each time it is called, i.e., the context is preserved across calls, making the function stateful. It copies the caller's context for the first call as the initial context. It is useful when you want to continue the conversation from the last call.

With these context management methods, you can now easily modularize your prompts as well as the workflow, so that they are more readable and maintainable.
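The four modes can be modeled in a few lines of plain Python. This is a toy sketch, not APPL's implementation: ToyContext, call, and the mode strings passed to call are hypothetical names chosen for illustration, and a context is reduced to a list of prompt strings.

```python
import copy


class ToyContext:
    """Hypothetical stand-in for a prompt context: just a list of prompts."""

    def __init__(self, prompts=None):
        self.prompts = list(prompts or [])


_resumed = {}  # per-function saved contexts, used only by the "resume" mode


def call(func, caller_ctx: ToyContext, mode: str = "new") -> ToyContext:
    """Run func with a context chosen according to mode and return it."""
    if mode == "new":
        ctx = ToyContext()  # fresh, empty context
    elif mode == "copy":
        ctx = copy.deepcopy(caller_ctx)  # like call by value
    elif mode == "same":
        ctx = caller_ctx  # like call by reference
    elif mode == "resume":
        # The first call copies the caller's context; later calls reuse it.
        if func not in _resumed:
            _resumed[func] = copy.deepcopy(caller_ctx)
        ctx = _resumed[func]
    else:
        raise ValueError(f"unknown mode: {mode}")
    func(ctx)
    return ctx


def add_format_rule(ctx: ToyContext) -> None:
    ctx.prompts.append("Dates should be in the format of YYYY/MM/DD.")


caller = ToyContext(["Today is 2024/02/29."])

new_ctx = call(add_format_rule, caller, "new")
copy_ctx = call(add_format_rule, caller, "copy")
print(len(new_ctx.prompts), len(copy_ctx.prompts), len(caller.prompts))  # 1 2 1

call(add_format_rule, caller, "same")
print(len(caller.prompts))  # 2: the callee changed the caller's context

first = call(add_format_rule, caller, "resume")
second = call(add_format_rule, caller, "resume")
print(first is second, len(second.prompts))  # True 4
```

In this model only the same mode lets the callee modify the caller's prompts, and only the resume mode keeps state between two calls of the same function.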

Example

In this example, we illustrate the usage of the first three context management methods and ways to decompose long prompts into smaller pieces using APPL functions. For the resume method, please refer to the multi-agent chat example.

- Use new context, the default method for passing context.

- Local prompts are returned as a list of records, which can be added back to the caller's context.

- Use the caller's context, which contains the prompt "Today is 2024/02/29.".

- The newly captured prompt influences the caller's context; now the caller's prompts contain both "Today is 2024/02/29." and "Dates should be in the format of YYYY/MM/DD.".

- Copy the caller's context; the prompts captured here will not influence the caller's context.

- Display the global prompts (convo(), meaning conversation) used for the generation (gen()).

- The callee returns PromptRecords containing sub-prompts, which are added to the caller's context.

- The callee returns None; the return value is not captured, but the context is already modified inside the callee.

The three queries are independent and run in parallel. The prompts and possible responses of the generations are shown below:

Prompt:

The output will look like 2024/03/01.

Prompt:

The output will look like 2024/02/28.

Caveats

@ppl needs to be the last decorator.

Since @ppl involves compiling the function, it should be the last decorator in the function definition, i.e. put it closest to the function definition. Otherwise, the function may not work as expected.
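As a reminder of how Python orders stacked decorators: the decorator written closest to the def is applied first and therefore receives the original function. The sketch below uses a hypothetical tracing decorator named tag, not @ppl itself, to make that order visible.

```python
applied = []


def tag(name):
    """Build a decorator that records when it is applied."""
    def decorator(func):
        applied.append((name, func.__name__))
        return func
    return decorator


@tag("outer")
@tag("inner")  # closest to the def: the position recommended for @ppl
def my_function():
    return "ok"


print(applied)  # [('inner', 'my_function'), ('outer', 'my_function')]
```

Placing @ppl in the inner position means it sees the function as written, before any other decorator has wrapped it.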

@ppl cannot be nested.

Currently, @ppl cannot be nested within another @ppl function. You may define the inner function as a normal Python function and call it in the outer @ppl function.